Weni deploying LLaMa-2 models for 50% cheaper and with 6X better latency

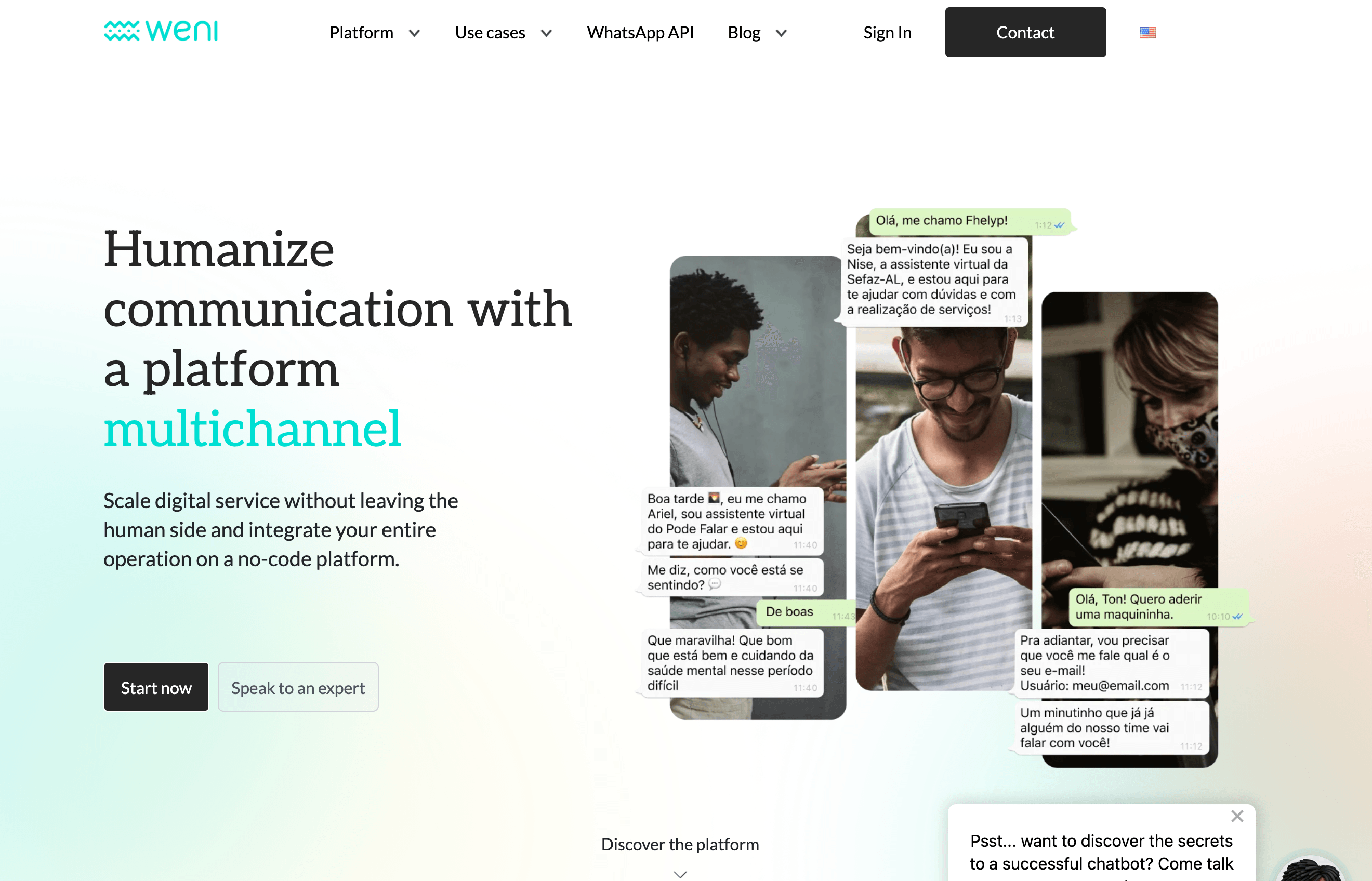

Weni is a startup (funded by the Google for Startups accelerator) headquartered in Maceió, Alagoas, that excels in the communication automation market. The Weni Platform empowers companies to enhance their interaction with customers through key communication and management channels, such as WhatsApp, social media, CRM, and ERP, as well as streamlining processes in marketing, sales, finance, and more.

With Weni’s cutting-edge technology, companies have the necessary environment to develop and manage highly customized chatbots, equipped with AI, and optimized to provide automated, human-like, and effective customer service.

Weni has a track record of making use of the latest AI advances, going back to before most people had heard of ChatGPT. Weni also has a HuggingFace page full of LLaMa-2-based models created for audiences speaking Brazilian Portuguese.

Weni noticed that a lot of its users were inputting large bodies of text and demanding hundreds of tokens in response. Since low latency is a crucial part of user experience, Weni wanted to bring the latency for generating paragraphs of text down to under 5 seconds.

To tackle these challenges, Weni sought a specialist in Large Language Models (LLMs), specifically those used in chatbots and coding assistants. Most LLM companies address the latency issue by enabling token streaming, so the user sees the first words while the rest is still being generated. Since Weni’s users were interacting through services like WhatsApp (where the message arrives all at once or not at all), streaming wasn’t going to cut it. Weni really needed to get the latency of the full response down to under 5 seconds.

The selected expert, an ex-Google ML engineer who had previously worked on core ML research with FOR.ai (an AI research consortium created by the Cohere founder Aidan Gomez), had experience replicating the results of models like GPT-4 with locally-hosted models, and especially in identifying performance boosts suitable for commercial deployment.

Speeding up the inference of large language models like those of the LLaMa family posed a few interesting challenges to engineer around:

Size of LLaMa-2-70B (it’s HUGE): Even though the goal is reducing the memory footprint, LLaMa-2-70B is still a gigantic language model. For the multi-gigabyte files involved in storing it, there needed to be ways of ensuring the uploading, downloading, and processing of the files would not create bottlenecks for any other part of the project.

GPU-driver-specific behaviors: There have been a lot of optimizations for running models on A100s, and by extension the H100s. A lot of the published benchmarks have also been run on high-end data-center hardware like the A100s. It was quite possible that some of these optimizations would come at the expense of performance on the RTX A6000. It’s an unfortunate fact that many LLM performance optimizations are hardware-specific. For example, did you know that by using PyTorch 2 with CUDA 11.8 (pytorch2+cu118) on Ada hardware you can get a 50%+ speedup? If you’re not using Ada hardware you might not know this, because this performance boost appears to be specific to just that CUDA+GPU combo. Also on that note, let’s not forget the propensity of CUDA to cause untold debugging hours.

Mutual exclusivity of Performance Boosts: This investigation started out with a long list of technologies to look into for speeding up LLM inference. One might think that getting the desired latency is just a question of combining all these together for a multiplicative effect. It’s not always that simple in practice, as many of the techniques were mutually exclusive with others (e.g., one type of inference server might require a component that a specific type of quantization works by doing away with).

Network Issues: The intent is not to host these models on users’ devices. Cloud compute resources were used instead, which meant considering how the choice of cloud network resources could negatively impact latency.

When it came to speeding up LLaMa-2-70B models, we looked at a few categories of speedups:

Quantization: Quantization is a technique for representing the information contained in neural networks (e.g., the weights and biases) in lower precision. This does a lot to reduce the memory overhead, and can even mean hosting a model with 70 billion parameters on a smaller GPU. It’s important to note that quantization mainly affects memory and does not guarantee an inference speedup (in fact, in many cases increased inference times might be the cost of the reduced memory footprint). Fortunately, there were plenty of ways for Weni to have its cake and eat it too. Activation-aware Weight Quantization (AWQ) is one such quantization technique that can also speed up inference (the authors claim a 1.4x speedup over GPTQ). In order to make sure the most is being made of the GPU hardware, it’s also important to couple this with NVIDIA Apex.
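To make the memory argument concrete, here is a rough back-of-the-envelope sketch of the weight footprint at different precisions. It is only an estimate under simplifying assumptions: it counts the weights alone and ignores the KV cache, activations, and the small per-group scale and zero-point overhead that 4-bit schemes carry. The function name is hypothetical, and 48 GB is the memory size of a single RTX A6000.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_70B = 70e9          # parameter count of a 70B model
RTX_A6000_MEMORY_GB = 48   # memory on a single RTX A6000

fp16_gb = weight_memory_gb(PARAMS_70B, 16)  # 16-bit weights
int4_gb = weight_memory_gb(PARAMS_70B, 4)   # 4-bit quantized weights (assumed width)

print(f"fp16 weights:  {fp16_gb:.0f} GB")
print(f"4-bit weights: {int4_gb:.0f} GB")
print("4-bit fits on one A6000:", int4_gb < RTX_A6000_MEMORY_GB)
print("fp16 fits on one A6000: ", fp16_gb < RTX_A6000_MEMORY_GB)
```

At 16 bits the weights alone are far larger than a single 48 GB card, while at 4 bits they fit with some room left over for the KV cache, which is what makes the cheaper GPU a realistic option.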

vLLM: HuggingFace’s Text Generation Inference is great for a lot of use-cases, but inference can still be disappointingly slow (even on expensive hardware). Fortunately, an inference engine called vLLM provides a way of speeding up LLM inference, using a technique called PagedAttention to more efficiently manage the attention keys and values (the KV cache) during inference.
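The core idea behind PagedAttention can be illustrated with a toy allocator: rather than reserving one large contiguous KV-cache region per request, memory is split into fixed-size blocks that are handed out only as a sequence grows. The sketch below is a simplified illustration of that bookkeeping, not vLLM's actual implementation; the class name, method names, and block sizes are all hypothetical.

```python
class PagedKVCache:
    """Toy block allocator: each sequence owns a list of fixed-size blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # ids of unused blocks
        self.block_tables = {}  # seq_id -> list of block ids
        self.lengths = {}       # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve room for one more token, allocating a block only if needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length == len(table) * self.block_size:  # all owned blocks are full
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=64, block_size=16)
for _ in range(40):  # a 40-token sequence
    cache.append_token("request-1")

blocks_used = len(cache.block_tables["request-1"])
print("blocks used:", blocks_used)
print("unused slots:", blocks_used * cache.block_size - cache.lengths["request-1"])
cache.free("request-1")
```

In this toy version a sequence wastes at most one partially filled block, instead of a whole pre-reserved maximum-length region, and freed blocks can be reused immediately by other requests.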

Doing more with smaller model size: Making LLaMa-2-70B models faster is definitely the instrumental goal, but the ultimate goal is making sure the users of Weni’s chatbots (including the RAG users) are happy. If a smaller model can deliver the same quality of results to the customer as a 70B model, but faster, then the smaller model should by all means be used. Not only was this shown to be possible with the release of Mistral-7B, but its successor Zephyr-7B showed it was also possible for a smaller model to beat LLaMa-2-70B on retrieval-augmented generation (RAG).

A typical user query for a 70B model might have taken in 512 tokens and produced 313 output tokens.
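For a sense of scale, the 5-second target can be turned into a generation-speed budget. The sketch below is illustrative arithmetic only: it assumes the whole budget is spent generating output tokens and ignores the time to process the 512 input tokens and any network overhead, so the real requirement is somewhat stricter. The function name is hypothetical.

```python
def min_decode_throughput(output_tokens: int, latency_budget_s: float) -> float:
    """Tokens per second needed to emit output_tokens within the budget."""
    return output_tokens / latency_budget_s


OUTPUT_TOKENS = 313     # typical response length from the example above
LATENCY_BUDGET_S = 5.0  # Weni's target for a complete response

tokens_per_second = min_decode_throughput(OUTPUT_TOKENS, LATENCY_BUDGET_S)
ms_per_token = 1000 * LATENCY_BUDGET_S / OUTPUT_TOKENS

print(f"required throughput: {tokens_per_second:.1f} tokens/s")
print(f"time budget per token: {ms_per_token:.1f} ms")
```

In other words, without streaming, a typical response has to be generated at upwards of 60 tokens per second, or roughly 16 ms per token, to arrive inside the budget.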

Thanks to the efforts described above, this process is now done in 1/6th of the time it would have taken on HuggingFace Text Generation Inference.

Because quantization techniques like AWQ reduce the memory overhead, Weni can use cheaper GPUs like the RTX A6000 instead of A100s.

When Weni is scaling these chatbots up to a million users, this will mean a lot of savings.

You can learn more about Weni on their website (where you can subscribe to their newsletter for product updates), LinkedIn, Twitter, Instagram, and YouTube.