Before BitTensor reached $420M+ market cap, we helped them go from 0 to 1

In the ever-evolving landscape of machine learning, BitTensor is one of the leading pioneers. BitTensor was founded by Jacob Steeves, an ex-Google engineer, and Ala Shaabana, Ph.D., a Post-doc at the University of Waterloo (yes, the same University of Waterloo where Vitalik Buterin went to school), who were on a mission to revolutionize distributed machine learning training systems.

Their project revolved around creating a robust protocol for distributed training over multiple organizations, presenting a radical new approach to the design of distributed machine learning systems. But in order to bring this ambitious vision to life, they needed the right talent on their side.

To meet the challenge, BitTensor teamed up with one of 5cube Labs’ members: an ex-Google machine learning engineer with an extensive background in cryptocurrencies and experience programming smart contracts and web3 apps.

“Our consultant came with a unique combination of machine learning, cryptocurrency, and federated learning experience that made them an ideal fit for this project,” explains Jacob Steeves, Co-Founder of BitTensor. “Their ability to navigate the complex landscape of machine learning research and their hands-on experience in the crypto world were indispensable.”

The project’s goal was to establish a distributed training mechanism that would function across multiple organizations. Unlike most distributed machine learning training systems, the proposed protocol needed to be robust against collusion and facilitate a fair and efficient market for AI models.

The AI models, or “intelligence systems,” were to interact over the internet to determine each other’s value, similar to a market. Higher-ranking models would gain more influence and token rewards. To counter collusion, the proposed protocol rewarded trusted models more, making the network resistant to up to 50% collusion.

To address these challenges, the consultant combined their expertise in machine learning and cryptocurrencies:

Collaborative Learning: The protocol was designed to facilitate collaborative learning across AI models, fostering a dynamic, interactive environment for training.

Collusion-Resistant System: The system was built to be resistant to collusion, with trusted models being rewarded more to maintain fairness.

Ranking and Reward Mechanism: The mechanism was designed so that higher-ranking models gain more influence and token rewards, giving models an incentive to improve their performance.
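To make the idea concrete, here is a minimal Python sketch of peer ranking with a trust gate. It is a toy illustration under our own assumptions, not the protocol from the paper: the function name `allocate_rewards`, the parameters `kappa` and `rho`, the sigmoid gate, and the example numbers are all hypothetical. Each peer scores the others, scores are weighted by the scorer's stake, and a peer's reward is scaled down when only a small share of total stake vouches for it.

```python
import math


def allocate_rewards(weights, stake, kappa=0.5, rho=10.0):
    """Toy stake-weighted peer ranking with a trust gate (illustrative only).

    weights[i][j] is the score peer i assigns to peer j.
    stake[i] is the stake held by peer i.
    kappa and rho are hypothetical tuning parameters for the gate.
    """
    n = len(stake)
    total_stake = sum(stake)
    s = [x / total_stake for x in stake]

    # Rank: stake-weighted sum of the scores each peer receives.
    rank = [sum(s[i] * weights[i][j] for i in range(n)) for j in range(n)]

    # Trust: share of total stake that gave this peer a non-zero score.
    trust = [sum(s[i] for i in range(n) if weights[i][j] > 0) for j in range(n)]

    # Sigmoid gate: the further trust falls below kappa, the more the
    # peer's rank is suppressed.
    gate = [1.0 / (1.0 + math.exp(-rho * (t - kappa))) for t in trust]

    raw = [r * g for r, g in zip(rank, gate)]
    z = sum(raw)
    return [x / z for x in raw] if z > 0 else [0.0] * n


# Peers 0-2 score each other; peers 3-4 collude and score only each other.
weights = [
    [0.0, 0.5, 0.5, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 1.0, 0.0],
]
stake = [1.0, 1.0, 1.0, 1.0, 1.0]

rewards = allocate_rewards(weights, stake)
for peer, reward in enumerate(rewards):
    print(f"peer {peer}: {reward:.3f}")
```

In this example every peer receives the same raw rank, so ranking alone would not separate the two groups. The colluding pair is vouched for by a smaller share of stake, so the gate reduces its share of the rewards relative to the other three peers.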

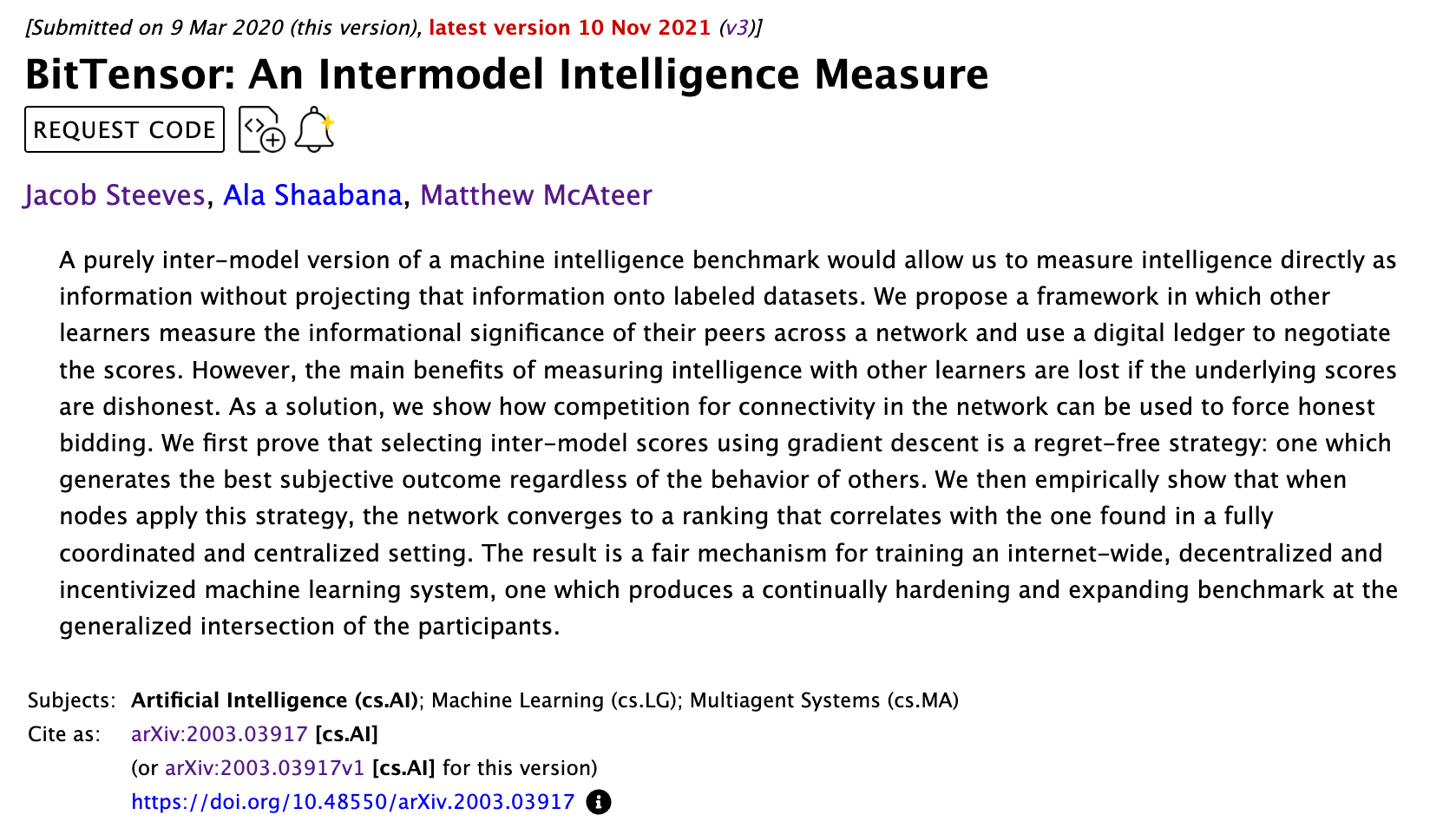

The project culminated in an arXiv paper (publishing on arXiv requires endorsement from existing academics on the platform) that offered a fresh take on distributed training systems. It proposed a new approach to enabling AI models to interact, learn from each other, and rank each other’s value.

BitTensor’s pioneering project evolved from an arXiv paper published during the early days of the COVID-19 pandemic into a full-fledged foundation maintaining the BitTensor software. Several AI apps have been developed on top of BitTensor, including the chatbots Hal and Reply Tensor, as well as image-text multimodal models.

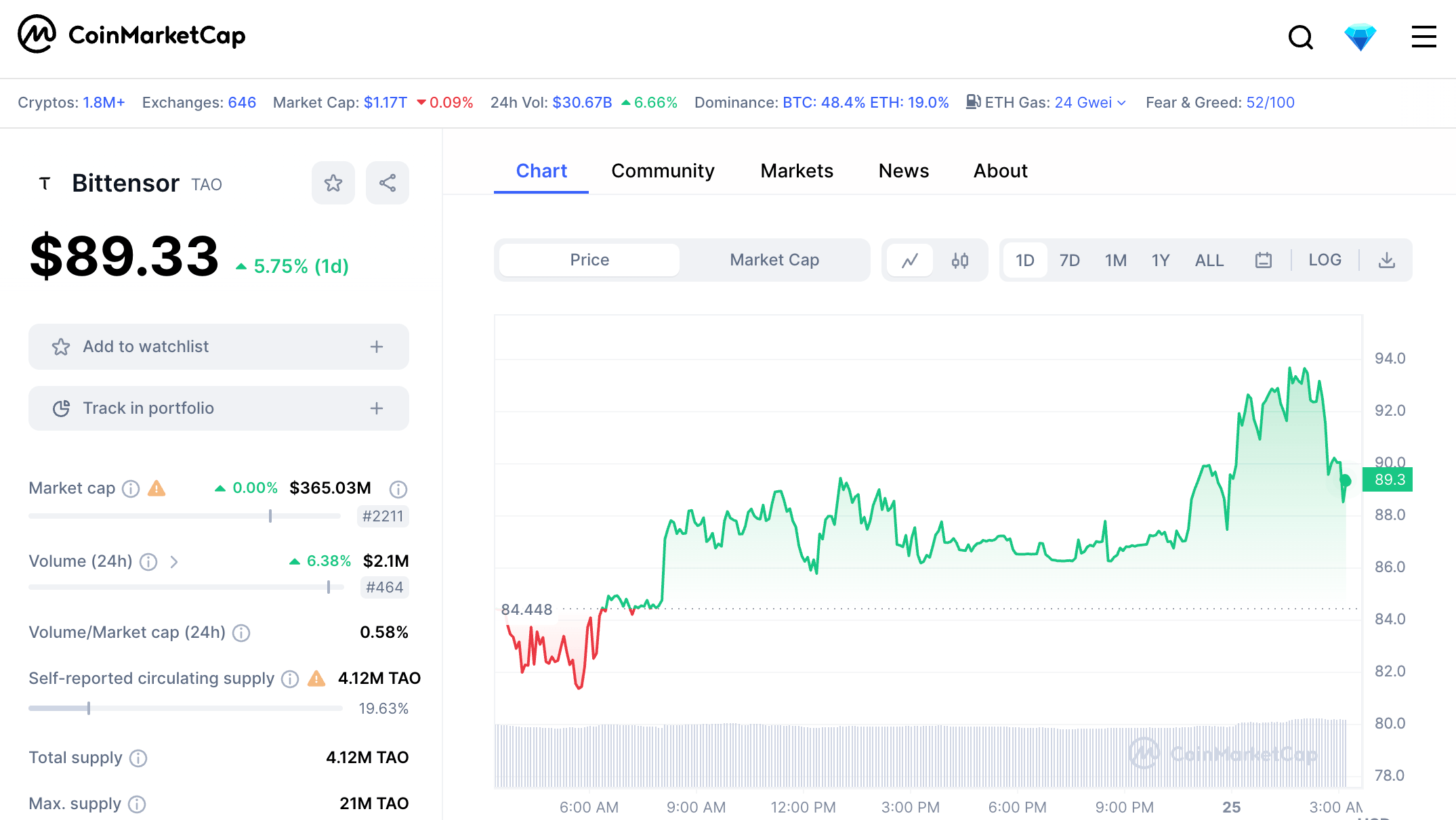

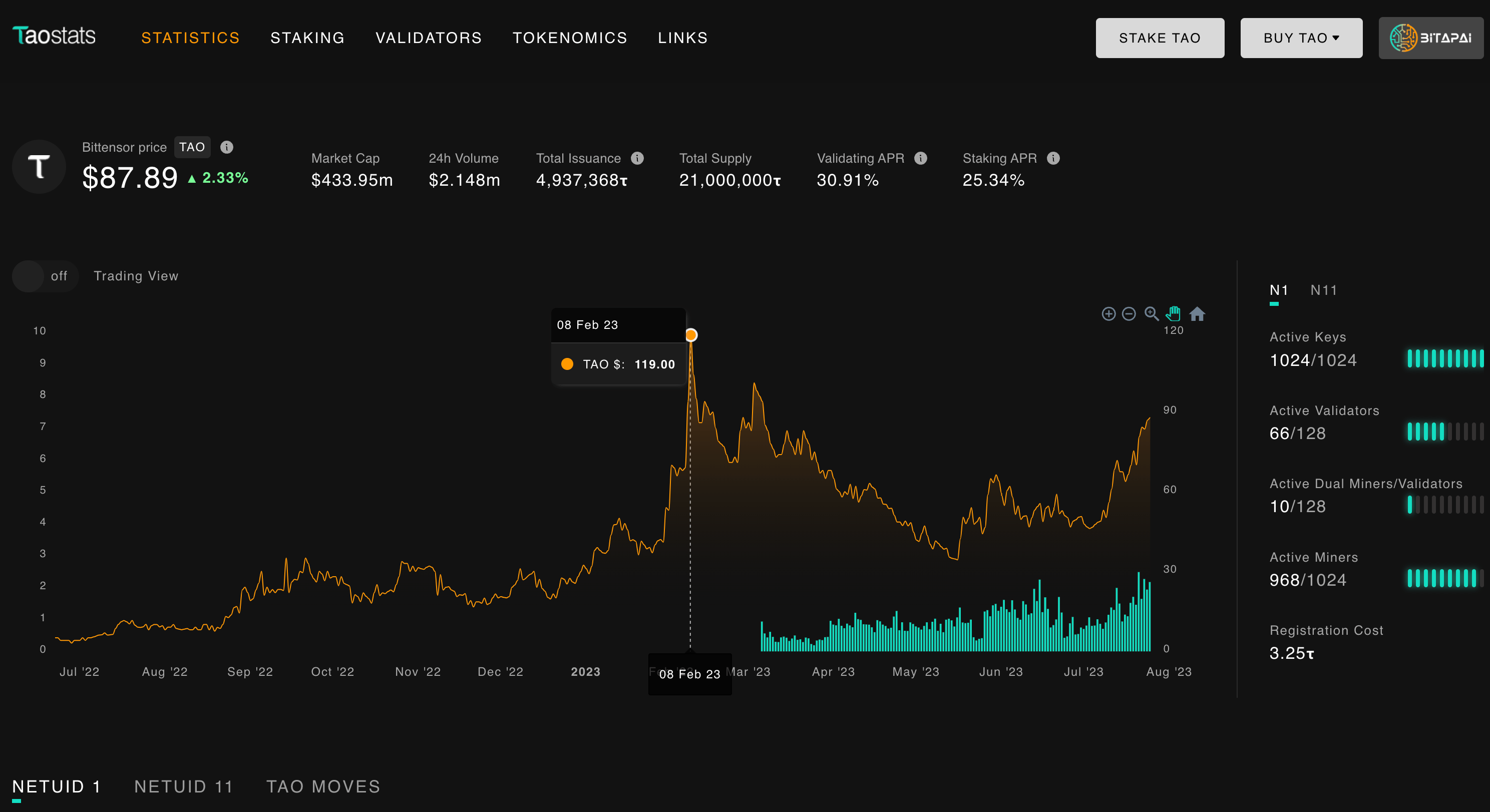

OpenTensor’s open-source BitTensor code has reached nearly 400 stars on GitHub. The BitTensor community on Discord has more than 12,000 members. The BitTensor network’s $TAO token, which was introduced as part of the system, reached a price of $119.00 on February 8, 2023, and as of July 24, 2023, had a market cap of $417.40 million.

As BitTensor continues to break new ground, it remains committed to pushing the boundaries of what’s possible in distributed machine learning, reshaping the landscape of how large AI models are trained.